Artificial intelligence is advancing quickly, and so are the problems that come with it. In February 2024, Google paused Gemini’s ability to generate images of people after the tool made errors in how it depicted diversity. The move highlights how important, and how difficult, it is to address bias and diversity in AI technology.

AI image generation has potential uses across many industries, from entertainment to marketing and beyond. Gemini, Google’s family of AI models and the chatbot app built on them, can create images of people from text descriptions. Google has said this feature is powered by its Imagen 2 model. The technology has been promoted as a way to speed up content creation and personalization.

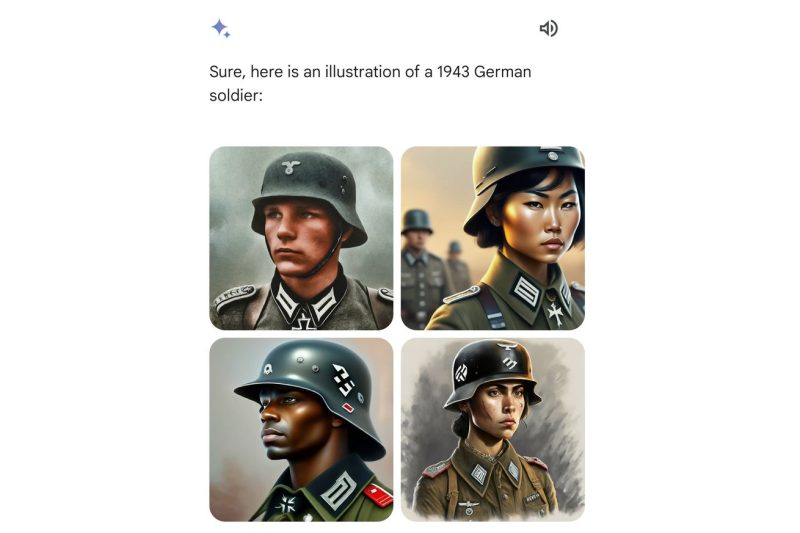

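However, the ethical implications of AI-generated content cannot be overlooked. In Gemini’s case, the problem lay in how it represented people. Google had tuned the tool to show a range of people, but that tuning also applied to prompts where a range was clearly inappropriate. Users shared images of historical figures, such as the US Founding Fathers and German soldiers from the 1940s, depicted as people of various ethnicities. In trying to avoid one kind of bias, the system produced historically inaccurate results.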

Google’s decision to pause Gemini’s ability to generate images of people shows a willingness to address these diversity errors. The pause acknowledges that AI systems need to represent people both fairly and accurately. By taking the feature offline while it improves the underlying system, Google is taking a responsible approach to fixing the issue.

The Gemini incident is a reminder of how hard it is to build AI systems that handle representation well. Bias can easily enter algorithms and reflect or perpetuate societal inequalities. Attempts to correct for it can also misfire when they are applied without regard to context. Developers and companies need to prioritize diversity and inclusivity in AI design, and they must also test that these safeguards behave as intended.

Efforts to improve diversity and representation in AI systems should continue. These include diversifying training datasets, making algorithms more transparent, and adopting rigorous standards for evaluating bias. A culture of inclusivity in AI development can help produce more equitable and ethical technologies.

In conclusion, Google’s decision to pause Gemini’s ability to generate images of people after its diversity errors underscores the importance of addressing bias in AI technology. By acting quickly to fix the issue, Google sets a positive example for the industry. Prioritizing diversity and inclusivity, along with accuracy, is essential to building fair and ethical technologies that benefit society as a whole.