OpenAI has unveiled new parental controls for ChatGPT, its flagship chatbot, in a significant move toward protecting teenagers online.

The initiative comes against the backdrop of mounting concern over AI’s influence on youth, especially after a high-profile lawsuit alleged ChatGPT played a role in a teen’s suicide.

Parents, educators, and regulators have been vocal about the need for tech companies to do more to shield young users from explicit content and mental health risks.

With AI-powered chatbots becoming ever more integrated into teens’ digital lives, OpenAI’s latest measures are both timely and highly scrutinized.

The new controls aim to give parents meaningful oversight of how their children interact with ChatGPT, while signaling OpenAI’s intention to balance innovation, safety, and privacy in an era of growing digital complexity.

What OpenAI’s parental controls do

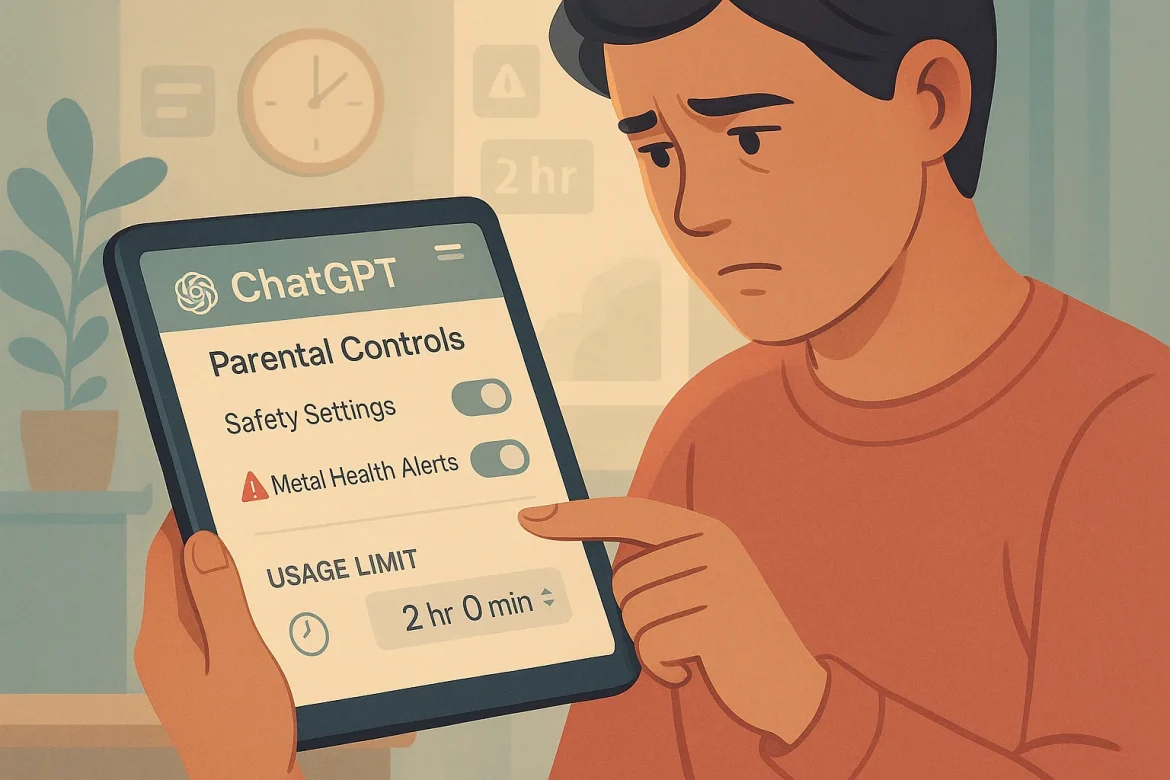

The new parental controls let parents actively guide their teenager’s experience on ChatGPT through a suite of options.

Parents can now link their accounts with their teens’ via a simple invitation, making it possible to control which features are accessible, when the chatbot can be used, and even how the AI responds to particularly sensitive prompts.

Notably, parents can set “blackout windows,” blocking ChatGPT usage during specified periods such as bedtime or study hours, and they can disable memory and chat history features for added privacy.

Crucially, OpenAI’s safety protocols have expanded to include real-time alerts for parents if a teen’s conversation suggests emotional distress or self-harm, although actual chat transcripts are not shared for privacy reasons.

In cases of acute crisis, OpenAI may bring in a human moderator and, if necessary, law enforcement.

Other measures restrict explicit and sexual content for users under 18. The company also expects to introduce stronger age verification and predictive age modeling soon to further shield minors.

OpenAI says these controls are the result of months of consultation with child safety advocates, mental health professionals, and privacy experts, and that its approach will continue to evolve as new risks emerge in the rapidly changing AI landscape.

A reckoning on child safety, tech, and teen wellbeing

The stakes for these parental controls extend far beyond ChatGPT, touching on a growing societal reckoning with technology’s impact on children’s wellbeing.

Recent years have seen an alarming rise in reports of teen anxiety, cyberbullying, and even suicide linked to unfiltered online experiences.

The suicide of 16-year-old Adam Raine, for example, prompted a wave of scrutiny and, ultimately, the lawsuit that fast-tracked OpenAI’s response.

Parents and lawmakers alike argue that tech companies must play an active role in protecting vulnerable minors, especially as AI begins to assume roles in companionship and counseling.

Parental controls such as these are regarded as a necessary first step, but policy advocates warn that they are no substitute for broader support systems: mental health resources, family communication, and ethical standards in tech design.

OpenAI openly admits these tools are just the beginning and says it will keep refining safeguards, guided by expert input and real-world outcomes.

As “AI natives” come of age, the debate over safety and autonomy will only intensify.

For now, OpenAI’s rollout signals an industry acknowledgment that with great power comes great responsibility: a lesson the digital age is still learning, sometimes at a heavy cost.

The post Explained: OpenAI’s new parental controls for ChatGPT and what they do appeared first on Invezz